Google continues to introduce imaging tools that warp the idea of what a photograph is – a single frame that captures a moment in time, as the image sensor saw it.

With Magic Eraser, you can remove objects and people from the frame, and AI will fill in the space they leave behind.

Magic Editor can change the sky to make it look like a sunset or a bright, sunny morning. Google calls it a way to "Reimagine Your Photos".

With Add Me, you can now appear in photos you weren't in. Had this been around in the 1960s, someone could have put a shooter behind the Grassy Knoll in Dealey Plaza.

Look, these images are no longer photographs. They’re artificially generated images based on photographs and shouldn’t be presented as the real deal, even if it’s just a case of improving the aesthetics for social media clout.

Talented Photoshop users have been able to accomplish such feats for ages. But the ability to tap a couple of buttons and let AI do the rest turns photographic forgery into a mass-market tool, and Google has a responsibility to put transparency at the forefront.

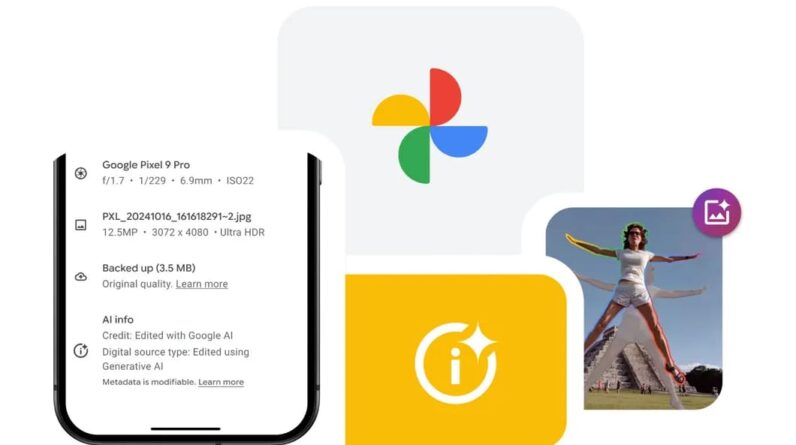

Now, in its Google Photos app at least, the company will do a slightly better job of telling you when an image has been generated or altered by AI rather than being an authentic, unaltered photograph.

In a blog post today, the company writes: “To further improve transparency, we’re making it easier to see when AI edits have been used in Google Photos. Starting next week, Google Photos will note when a photo has been edited with Google AI right in the Photos app.”

Google says a photo's metadata already indicates when generative AI has been used, but it is now going further by making that information visible alongside the "file name, location and backup status in the Photos app."

It's still not that obvious, because who regularly scrolls below the fold when browsing Google Photos? It's not exactly a label on the image itself, is it? Something like a watermark would make it clear to everyone that not everything in the photo is as it seems.

Google seems reluctant, almost as if it's worried about undermining its own clever feature, and so it is doing the bare minimum.

At a time of rampant misinformation, when a key spreader of misinformation runs a massive social network and amplifies it on a daily basis, we need more forthrightness from Google.

The US presidential election is less than two weeks away, and an AI-altered image could change the fate of the planet.

Google has been quick to roll out these tools with the abandon of a kid handed the key to the candy store, without putting the requisite guardrails in place.

Now the company is saying "here's a bit more fine print", and that's not really enough. Do better.